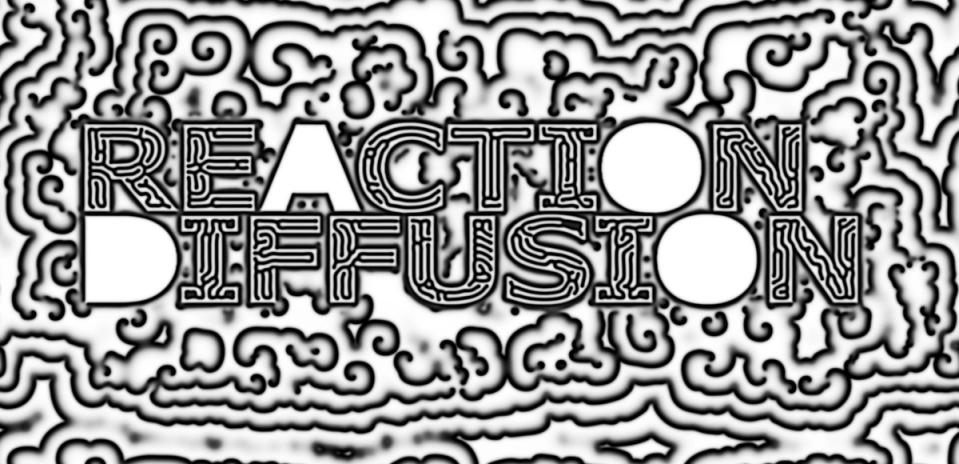

Reaction-Diffusion Playground

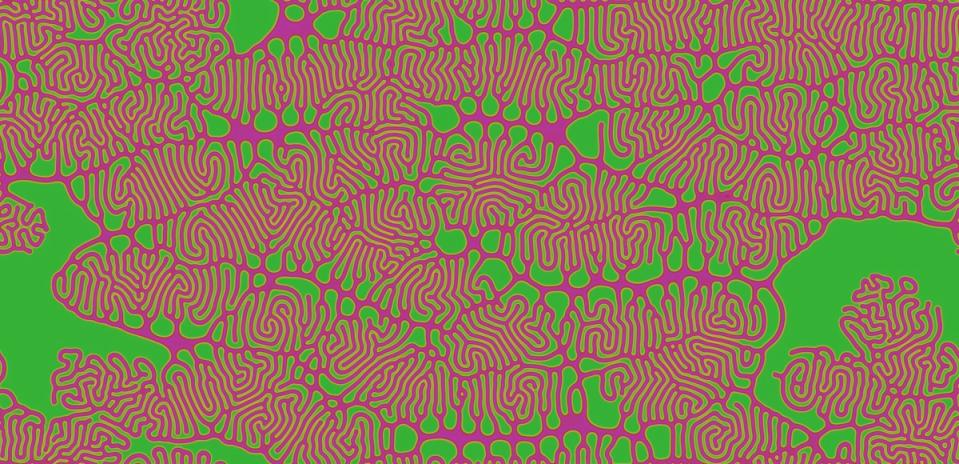

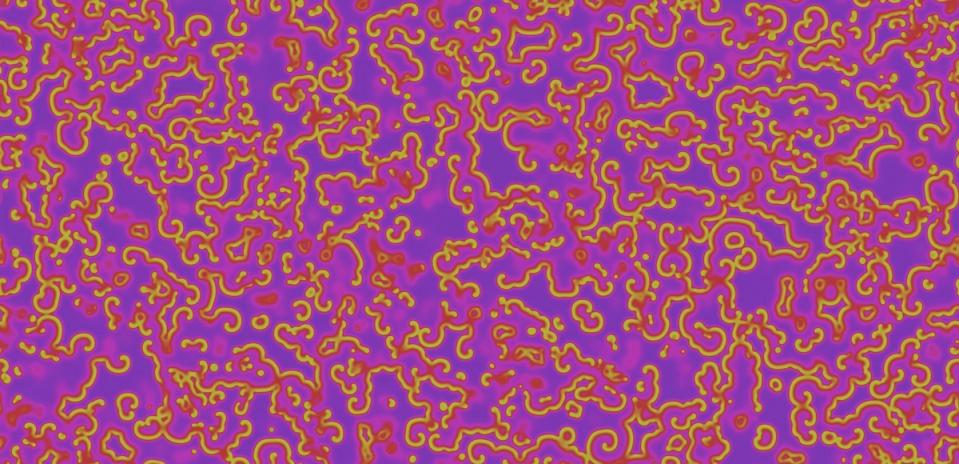

Reaction-diffusion is a mathematical model describing how two chemicals react with each other as they diffuse through a shared medium. Alan Turing proposed it in 1952 as a possible explanation for how the stripes and spots seen on the skin and fur of animals like giraffes and leopards form.

When run at large scales and high speeds, reaction-diffusion systems can produce an amazing variety of dynamic, mesmerizing patterns and behaviors. Since Turing published his original paper on the topic, reaction-diffusion has been studied extensively by researchers in biology, chemistry, physics, computer science, and other fields. Today it is accepted as a plausible (some say proven) explanation for the formation of patterns in zebrafish pigmentation, hair follicle spacing, the Belousov-Zhabotinsky (BZ) chemical reaction, certain types of coral, zebra and tiger stripes, and more.
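This page does not spell out the equations being simulated. As an assumption, since the presets listed among the features come from a Gray-Scott explorer, one widely used two-chemical formulation is the Gray-Scott model. The symbols here are not taken from this page: $A$ and $B$ are the two chemical concentrations, $D_A$ and $D_B$ their diffusion rates, $f$ the feed rate, and $k$ the kill rate.

$$
\begin{aligned}
\frac{\partial A}{\partial t} &= D_A \nabla^2 A - A B^2 + f\,(1 - A) \\
\frac{\partial B}{\partial t} &= D_B \nabla^2 B + A B^2 - (k + f)\,B
\end{aligned}
$$

In each equation the first term is diffusion, the $A B^2$ term is the reaction that converts $A$ into $B$, and the last term replenishes $A$ or removes $B$.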

Features

My implementation is heavily informed by the work of many other people and projects, all of which are cataloged in the GitHub repo. I began by thoroughly reading Alan Turing’s original paper, along with the seminal works of Karl Sims and Robert Munafo, all of which provided critical insight into parameters and modes of operation rarely seen in implementations in the creative coding space.

On the coding side, I would also like to credit pmneila, Red Blob Games, Ken Voskuil, and Jonathan Cole for sharing their source code, as their projects in particular helped me work through specific coding challenges like rendering using custom shaders in ThreeJS and achieving different visual effects.

In the Reaction-Diffusion Playground you will find:

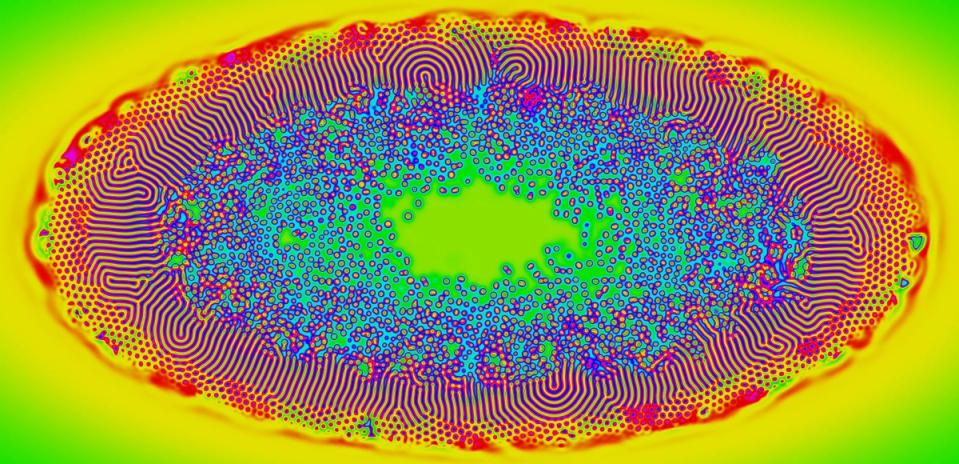

- Interactive parameter map for quickly jumping to interesting areas of the parameter space just by clicking.

- Basic MIDI controller support for all parameters.

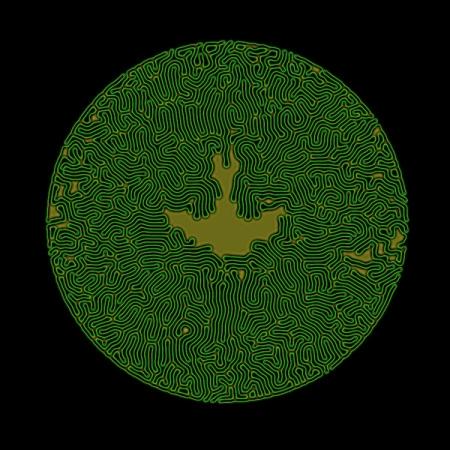

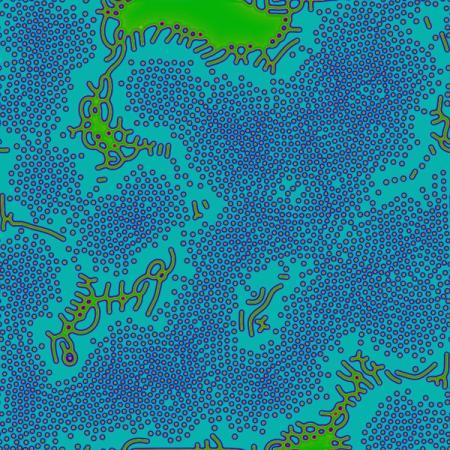

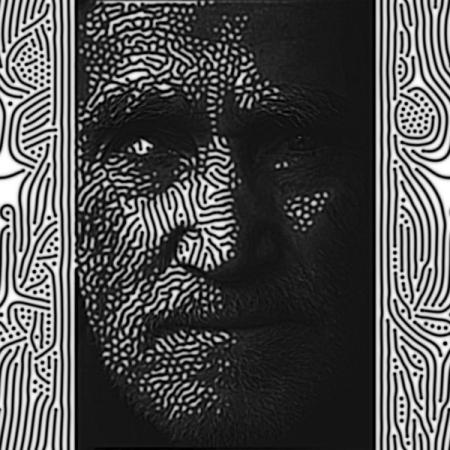

- Style map support: upload an image to use as a “style map”, which interpolates equation parameters between two user-defined values based on pixel brightness. The style map image can also be translated, scaled, and rotated!
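The brightness-driven interpolation behind a style map can be sketched roughly as follows. This is a minimal illustration, not the Playground's actual shader code: the function names are hypothetical, brightness is assumed to be the plain average of the RGB channels, and the interpolation is assumed to be linear.

```python
def brightness(pixel):
    """Brightness in [0, 1] of an (r, g, b) pixel with 0-255 channels.

    Assumption: a plain channel average; the real project may weight
    the channels differently.
    """
    r, g, b = pixel
    return (r + g + b) / (3 * 255)


def lerp(start, end, t):
    """Linear interpolation from start (t = 0) to end (t = 1)."""
    return start + (end - start) * t


def styled_parameter(pixel, value_at_black, value_at_white):
    """Pick a parameter value for one cell from its style map pixel."""
    return lerp(value_at_black, value_at_white, brightness(pixel))


# A tiny 1x3 "style map": black, mid-gray, white.
style_map = [(0, 0, 0), (128, 128, 128), (255, 255, 255)]

# Hypothetical user-defined endpoints for one equation parameter.
values = [styled_parameter(p, 0.03, 0.06) for p in style_map]
```

Dark pixels pull the parameter toward the first user-defined value and bright pixels toward the second, so a single image can make different regions of the simulation behave differently.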

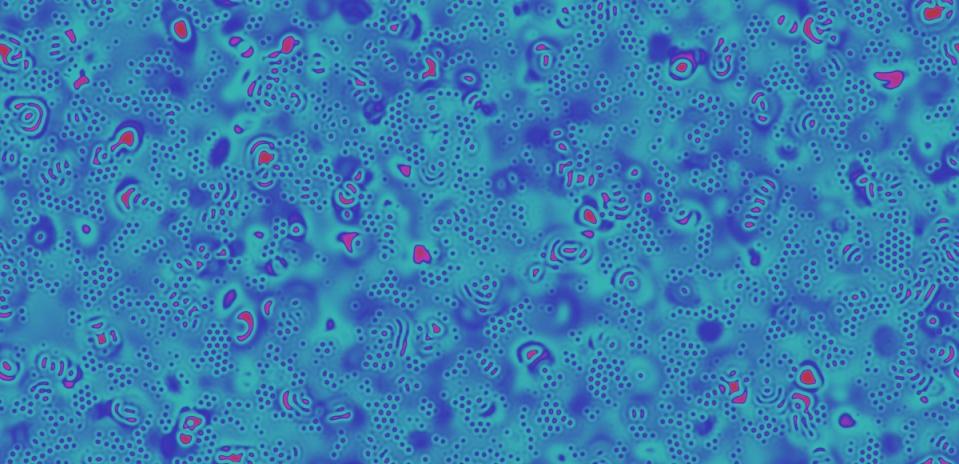

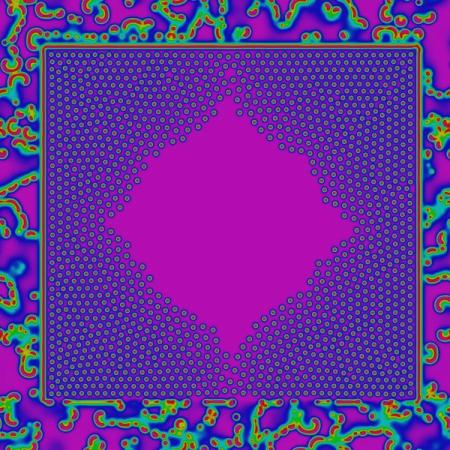

- Multiple rendering styles, including HSL mapping, gradient mapping, grayscale, and a number of styles based on Red Blob Games’ implementation.

- Configurable seed patterns including a circle, rectangle, text, or an uploaded image.

- Support for diffusion bias, meaning diffusion can occur faster in one direction than in others (achieved through directional weighting applied to the Laplacian stencil).

- A large collection of interesting parameter presets from Robert Munafo’s WebGL Gray-Scott Explorer.
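To make the diffusion bias item above more concrete, here is a minimal sketch of a directionally weighted Laplacian stencil. It is an illustration under stated assumptions rather than the project's implementation: the base 3x3 weights (-1 at the center, 0.2 for edge neighbors, 0.05 for diagonals) are a common choice in Gray-Scott tutorials, and `bias_x` / `bias_y` are hypothetical names for the directional weighting.

```python
def biased_laplacian(grid, x, y, bias_x=0.0, bias_y=0.0):
    """Weighted 3x3 Laplacian of `grid` at (x, y), wrapping at the edges.

    The bias is added to the neighbor on one side and subtracted from
    the neighbor on the opposite side, so the weights still sum to zero.
    """
    height, width = len(grid), len(grid[0])

    def at(dx, dy):
        # Wrap-around (toroidal) lookup relative to (x, y).
        return grid[(y + dy) % height][(x + dx) % width]

    adjacent, diagonal = 0.2, 0.05  # assumed base stencil weights

    total = -1.0 * at(0, 0)
    total += (adjacent + bias_x) * at(1, 0)    # neighbor at x + 1
    total += (adjacent - bias_x) * at(-1, 0)   # neighbor at x - 1
    total += (adjacent + bias_y) * at(0, 1)    # neighbor at y + 1
    total += (adjacent - bias_y) * at(0, -1)   # neighbor at y - 1
    total += diagonal * (at(1, 1) + at(-1, 1) + at(1, -1) + at(-1, -1))
    return total


# A 5x5 grid with a single spike of chemical in the middle.
grid = [[0.0] * 5 for _ in range(5)]
grid[2][2] = 1.0

# With a positive bias_x, each cell weights its x + 1 neighbor more
# heavily, so the cell to the left of the spike gains more than the
# cell to its right: the chemical spreads faster toward -x.
left_gain = biased_laplacian(grid, 1, 2, bias_x=0.1)
right_gain = biased_laplacian(grid, 3, 2, bias_x=0.1)
```

With both biases at zero the stencil is symmetric and the spike spreads evenly. Because the opposite-side adjustments cancel, a uniform grid still yields a Laplacian of zero for any bias.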